Introduction by Jim Koch

I have actually spent a lot of time over the last few weeks

with the person I have the honor to introduce next. That

is Al Hoagland. Today’s program would not have been

possible without Al's vision and perspective and ability to convene

old friends and individuals that can offer such a rich perspective

on this magnificent industry. Rey Johnson's name has been

mentioned. Al actually joined Rey Johnson's new San Jose

lab in 1956 to head research on magnetic disk recording.

Al received his doctorate in Electrical Engineering at Berkeley,

where he served on the faculty and pursued research in digital

magnetic recording prior to joining IBM. In 1957 Al started

two research projects in the IBM San Jose laboratory. The

first research project focused on single disk drives while the

second focused on small-scale magnetic strip files, more commonly

referred to as replaceable cartridge technology. He developed

track-following servo techniques for high track density

that were later used throughout the disk drive industry.

He also investigated longitudinal and perpendicular digital magnetic

recording techniques and their basic head designs. In 1959 he

became head of engineering science for advanced magnetic storage

technology. His work then expanded to include signal processing

for magnetic recording channels and air bearing design for controlling

the spacing between magnetic heads and disk surfaces. Al

has been instrumental, as others have mentioned, in the formation

of university centers in storage technology, including our center

here at Santa Clara University. He is a Fellow of the IEEE, a past

president of the IEEE Computer Society and a trustee of the Charles

Babbage Foundation for the History of Computing. He has

written numerous articles on the scientific and technological

underpinnings of this industry, including a book in its second

edition, Digital Magnetic Recording.

Al, it is a pleasure to welcome you here today.

AL HOAGLAND

This early RAMAC prototype model was intended to demonstrate that you could get a lot of magnetic surface area if you pack disks close together, showing the advantage of a disk stack implementation.

Shown is the flying head, whose contour design generates a self-acting air bearing from the pressure of the boundary layer of air on the disk, keeping the head off the surface.

Shown is ADF chief engineer Al Shugart, who is obviously adjusting the positioning of the module in the disk drive to personally ensure that there will never be any failures.


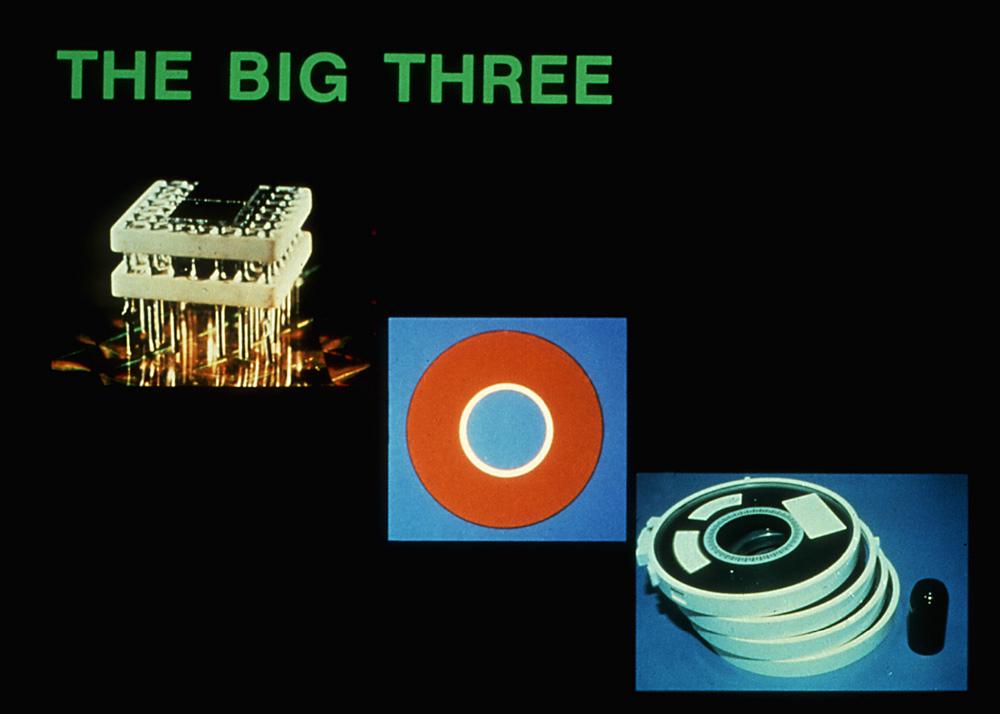

This picture, symbolizing the three dominant memory storage technologies, was made more than two decades ago, and the message it conveys is still true today.